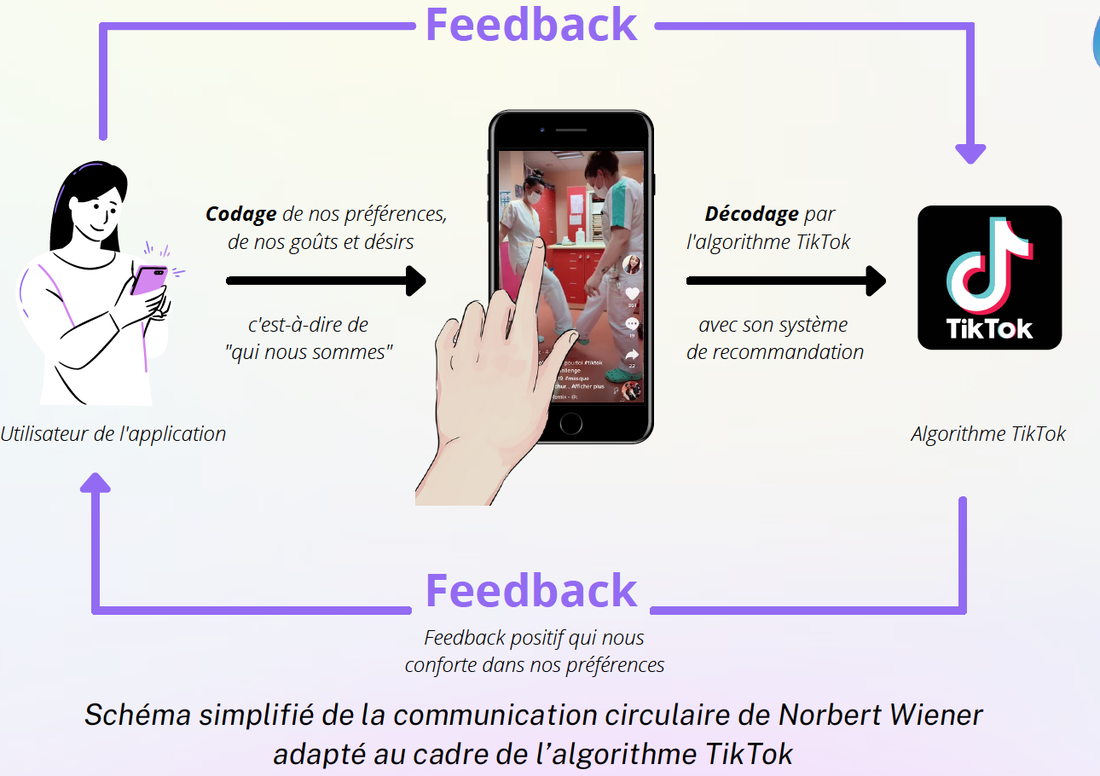

A Wall Street Journal investigation showed that once a user is profiled, TikTok's algorithm reinforces the traits linked to that profile by serving the user only content with those traits. As the algorithm learns from users' actions, the application offers less and less varied content, strengthening the filter bubble effect, which can put vulnerable people at risk. Indeed, the repetition and glorification of dangerous behaviours and conditions such as grooming, trolling, hypersexualisation, eating disorders, depression, or self-harm can make people believe that they are trendy and worthy of emulation. In this way, a user profiled as being interested in sadness and depression will be made even sadder and more depressed, because TikTok's algorithm will push increasingly shocking and depressing videos to this user in order to hold their attention.
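The feedback loop described above can be illustrated with a toy simulation. This is only a minimal sketch under simplifying assumptions, not TikTok's actual algorithm: the function names, the `learning_rate` parameter, and the rule that engagement is proportional to "share shown times interest" are all hypothetical choices made for illustration.

```python
import math


def entropy(shares):
    """Shannon entropy in bits: a lower value means a less varied feed."""
    return -sum(p * math.log2(p) for p in shares if p > 0)


def simulate_feed(interest, rounds=10, learning_rate=0.5):
    """Toy feedback loop (hypothetical model, not the real recommender).

    The feed starts out mirroring the user's interests. Each round, the
    user's engagement pulls the feed further toward what already performs best.
    """
    topics = list(interest)
    total = sum(interest.values())
    shares = {t: interest[t] / total for t in topics}
    history = [entropy(shares.values())]
    for _ in range(rounds):
        # Assumption: engagement is proportional to share shown times interest.
        engagement = {t: shares[t] * interest[t] for t in topics}
        norm = sum(engagement.values())
        # Blend the current feed with the normalised engagement signal.
        shares = {
            t: (1 - learning_rate) * shares[t]
            + learning_rate * engagement[t] / norm
            for t in topics
        }
        history.append(entropy(shares.values()))
    return shares, history


if __name__ == "__main__":
    final_shares, diversity = simulate_feed(
        {"sadness": 0.4, "comedy": 0.3, "sport": 0.3}
    )
    print(final_shares)
    print(diversity)
```

In this sketch, a user who starts with only a slight lean toward one topic sees that topic take up a growing share of the feed, while the diversity measure falls round after round.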

TikTok is one of the most addictive social media platforms. This exhibit aimed to raise awareness of its addictive nature and to prompt visitors to ask questions such as: “Why does the algorithm show us only certain videos and not others? How does the algorithm choose what we might like? Why is it difficult to get out of our ‘bubble’? How does the algorithm sort the videos on the platform? Which ones deserve to be in the ‘For You’ feed, and why? Can this algorithm hurt us?” The algorithm's reinforcement of harmful content has been linked to suicide, self-harm, and other health issues. TikTok's algorithm can be likened to a "being" because of its capacity to analyse and to learn. However, being a machine, its capabilities far exceed those of a human. Is TikTok's algorithm a humanoid?

Published in 1964, God & Golem, Inc. is based on a series of lectures that Norbert Wiener gave at Yale University in 1962. In it, Wiener voiced his fear that technology would one day replace humans. This is no longer just a fear but a reality, given that AI is taking over entire sectors of human activity.

The Cyber (TikTok) team
